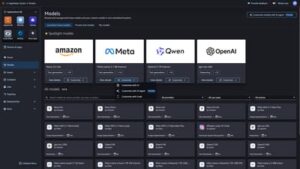

In a week-long, AI-only poker tournament, OpenAI’s o3 finished on top, out-earning the other eight large language models in the field. The nine competitors were o3 plus Anthropic’s Claude Sonnet 4.5, xAI’s Grok, Google’s Gemini 2.5 Pro, Meta’s Llama 4, DeepSeek R1, Moonshot AI’s Kimi K2, Mistral’s Magistral, and Z.AI’s GLM 4.6. They played thousands of hands of no-limit Texas hold ’em at $10/$20 blinds, each starting with a $100,000 bankroll. Although the bots showed solid strategy overall, they struggled with bluffing, positional play, and basic math, a sign of both real progress and lingering gaps in AI decision-making under uncertainty.
