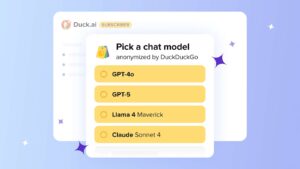

DuckDuckGo has upgraded its privacy‑focused subscription plan to give members access to a range of AI models at no additional cost. The plan, which already bundles a VPN service, personal information removal, and identity theft restoration, now includes models such as Anthropic’s Claude 3.5 Haiku, Meta’s Llama 4 Scout, Mistral AI’s Mistral Small 3 24B, and OpenAI’s GPT‑4o mini. Users on the $9.99‑per‑month tier will also be able to use newer models like GPT‑4o, GPT‑5, Claude Sonnet 4, and Llama Maverick, which offer more nuanced responses while keeping DuckDuckGo’s emphasis on privacy.
