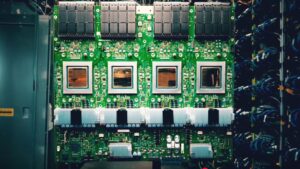

A side-by-side look at three leading AI accelerators: Huawei's Ascend 950 series, Nvidia's H200 (built on the GH100 Hopper GPU), and AMD's Instinct MI300 (Aqua Vanjaram). The comparison covers architecture, process technology, transistor count, die size, memory type and capacity, memory bandwidth, and compute performance at FP8, FP16, FP32, and FP64. It also covers the workloads each chip targets, such as large-scale LLM training, inference, and high-performance computing (HPC). The chips reach the market on different timelines, but all three vendors position them for data-center and HPC workloads.