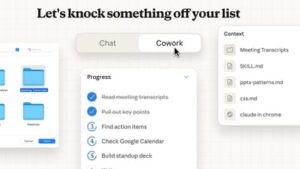

Anthropic has introduced Claude Cowork, a new AI-agent capability for its Claude chatbot. It is available as a research preview in the macOS app for Claude Max subscribers. The feature lets users grant Claude access to local folders so that it can read, edit, or create files there, handling tasks such as reorganizing a downloads folder, generating spreadsheets, or drafting reports. Claude Cowork also integrates with services such as Asana, Notion, PayPal, and Chrome, offers continuous updates, and can run tasks in parallel. Anthropic highlighted safety concerns, warning that the model can delete files and that prompt-injection attacks remain a risk. It urged users who are not yet subscribers to join a waitlist.
