Google Unveils Ironwood TPU with Record 1.77PB Shared Memory

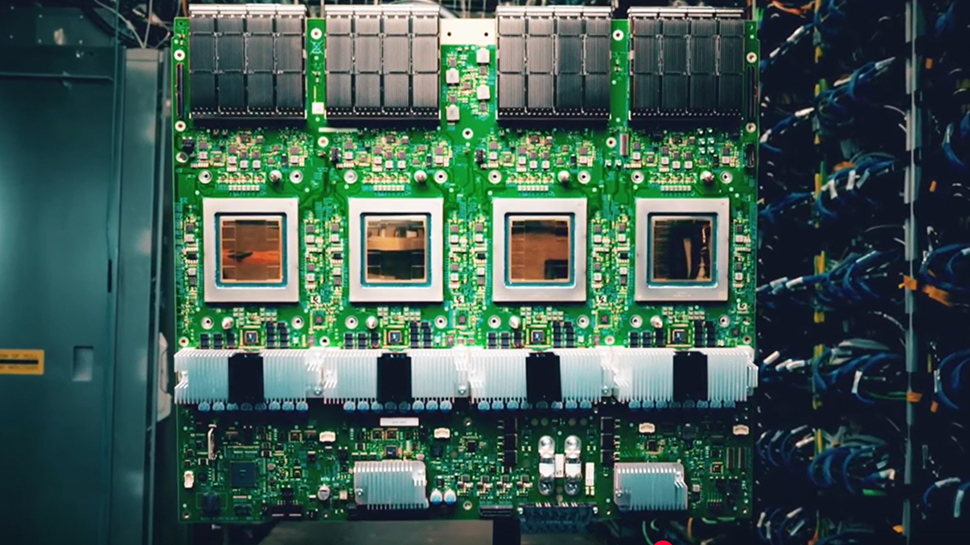

Google revealed its latest Tensor Processing Unit, named Ironwood, as the company’s first TPU built primarily for massive inference workloads rather than training. The chip integrates two compute dies, each delivering 4,614 TFLOPs of FP8 performance. Eight stacks of HBM3e memory provide 192 GB per chip, delivering 7.3 TB/s bandwidth. The dual‑die design enables the system to scale without glue logic, supporting up to 9,216 chips per pod.

Record‑Setting Shared Memory

When fully assembled, the Ironwood pod offers 1.77 PB of directly addressable HBM memory, setting a new world record for shared‑memory supercomputers. The massive memory pool is linked via optical circuit switches that connect the racks, allowing the system to maintain high bandwidth while scaling.

Performance and Efficiency

Across the full pod, the configuration reaches 42.5 exaflops of performance. Google claims a two‑fold improvement in performance per watt compared with its previous generation, Trillium, thanks to dynamic voltage‑frequency scaling and a cold‑plate liquid‑cooling solution that leverages the company’s third‑generation cooling infrastructure.

Reliability, Availability, and Serviceability (RAS)

Ironwood incorporates several on‑chip reliability features, including a root of trust, built‑in self‑test functions, and mechanisms to mitigate silent data corruption. Logic‑repair functions improve manufacturing yield, and the system can reconfigure around failed nodes, restoring workloads from checkpoints.

AI‑Assisted Design and SparseCore

Google used AI techniques to optimize the ALU circuits and floor plan of the Ironwood chip. A fourth‑generation SparseCore is added to accelerate embeddings and collective operations, targeting workloads such as recommendation engines.

Deployment and Availability

Google has begun deploying Ironwood in its hyperscale cloud data centers, though the TPU remains an internal platform not directly offered to external customers. The design reflects Google’s long‑term strategy to build high‑end AI compute across chip, interconnect, and physical infrastructure layers.

Used: News Factory APP - news discovery and automation - ChatGPT for Business