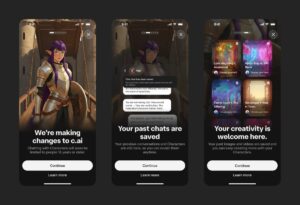

A recent roundup highlights eight lesser‑known AI applications that go beyond chatbots, each addressing a specific need. Merlin identifies birds from photos or calls, and Goblin.Tools is a suite designed for neurodivergent users. OpusClip automates video clipping for social media, while Rewind.ai records and indexes a user's activity on a Mac so it can be searched later. Be My AI assists blind or low‑vision users by describing their surroundings in real time. Character.AI lets users converse with fictional or historical personalities, Gamma generates polished presentation slides, and SciSpace Agent serves as a research assistant for scholars. Together, they illustrate how AI is expanding into practical, niche domains.
