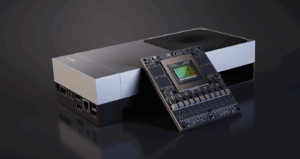

NVIDIA has announced the Jetson AGX Thor, the latest generation of its robot‑brain platform. Built on the new Blackwell GPU architecture, Thor delivers roughly 7.5 times the AI compute and 3.5 times the energy efficiency of its predecessor, Jetson Orin. The system‑on‑module runs generative AI models, enabling robots to interpret visual and language data in real time. Development kits are priced at $3,499, while production‑grade T5000 modules cost $2,999 each in large wholesale orders. Existing Jetson customers such as Amazon, Meta, Agility Robotics, and Boston Dynamics are expected to adopt the new hardware for advanced physical‑AI applications.
