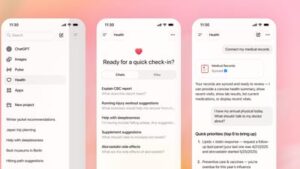

ChatGPT offers convenience for many everyday tasks, but it falls short in critical areas such as health diagnoses, mental‑health support, emergency safety decisions, personalized finance or tax advice, handling confidential data, illegal activities, academic cheating, real‑time news monitoring, gambling, legal document drafting, and artistic creation. While it can provide general information and brainstorming assistance, relying on it for these high‑stakes matters can lead to serious consequences. Users are urged to treat the AI as a supplemental tool and seek professional expertise where accuracy, legality, or personal safety is at stake.
