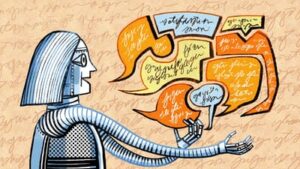

Humans&, a startup founded by veterans of Anthropic, Meta, OpenAI, xAI, and Google DeepMind, is building a foundation model focused on social intelligence and team coordination. The company raised a large seed round to develop what it describes as a “central nervous system”: a model that helps people collaborate, make group decisions, and interact with AI more conversationally. The model will be trained with long-horizon and multi-agent reinforcement learning so that it can remember users, understand their motivations, and act as connective tissue across organizations. The product is still in development, but the team aims to own the collaboration layer itself rather than plug into existing tools.