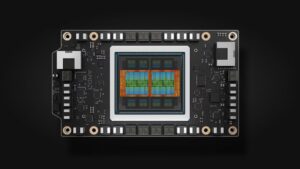

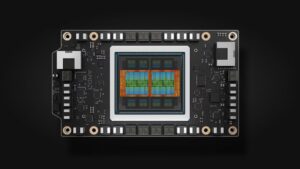

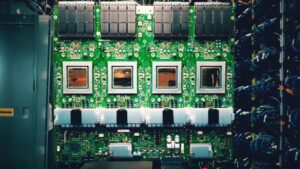

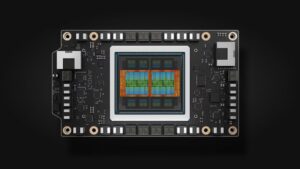

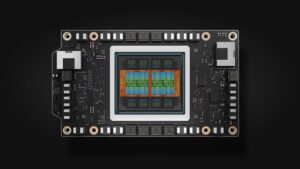

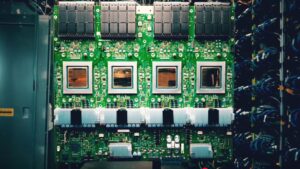

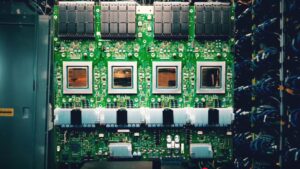

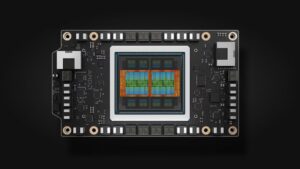

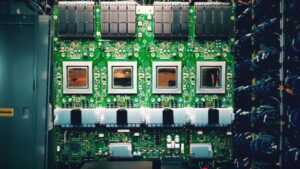

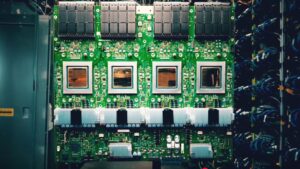

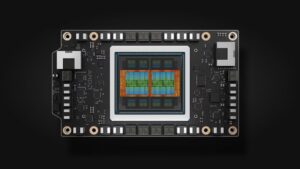

Google introduced its seventh-generation Tensor Processing Unit, Ironwood, at a recent Hot Chips event. The dual-die chip delivers 4,614 TFLOPs of peak FP8 performance and carries eight stacks of HBM3e, for 192 GB of memory per chip. Scaled to a 9,216-chip pod, the system reaches 1.77 PB of directly addressable memory (9,216 × 192 GB), which Google describes as the largest shared-memory configuration recorded for a supercomputer. The architecture adds reliability features, liquid-cooling infrastructure, and AI-assisted design optimizations, and it is already being deployed in Google Cloud data centers for large-scale inference workloads.
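The pod-level figure follows directly from the per-chip numbers quoted above. The sketch below reproduces that arithmetic; it assumes decimal units (1 PB = 1,000,000 GB), and the function and constant names are illustrative only. The aggregate FP8 figure is a derived peak that assumes per-chip throughput adds up linearly across the pod; it is not a number stated in the text.

```python
# Per-chip figures quoted in the article.
CHIPS_PER_POD = 9_216
HBM_PER_CHIP_GB = 192
FP8_TFLOPS_PER_CHIP = 4_614


def pod_memory_pb(chips: int = CHIPS_PER_POD,
                  gb_per_chip: int = HBM_PER_CHIP_GB) -> float:
    """Total HBM across the pod in petabytes (assumes 1 PB = 1e6 GB)."""
    return chips * gb_per_chip / 1_000_000


def pod_peak_fp8_exaflops(chips: int = CHIPS_PER_POD,
                          tflops_per_chip: int = FP8_TFLOPS_PER_CHIP) -> float:
    """Aggregate peak FP8 throughput in exaFLOPs.

    Assumption: per-chip peak sums linearly across chips
    (1 exaFLOP = 1e6 TFLOPs).
    """
    return chips * tflops_per_chip / 1_000_000


if __name__ == "__main__":
    print(f"Pod memory:   {pod_memory_pb():.2f} PB")
    print(f"Pod peak FP8: {pod_peak_fp8_exaflops():.1f} EFLOPs")
```

Under these assumptions the memory total rounds to the 1.77 PB cited above.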
